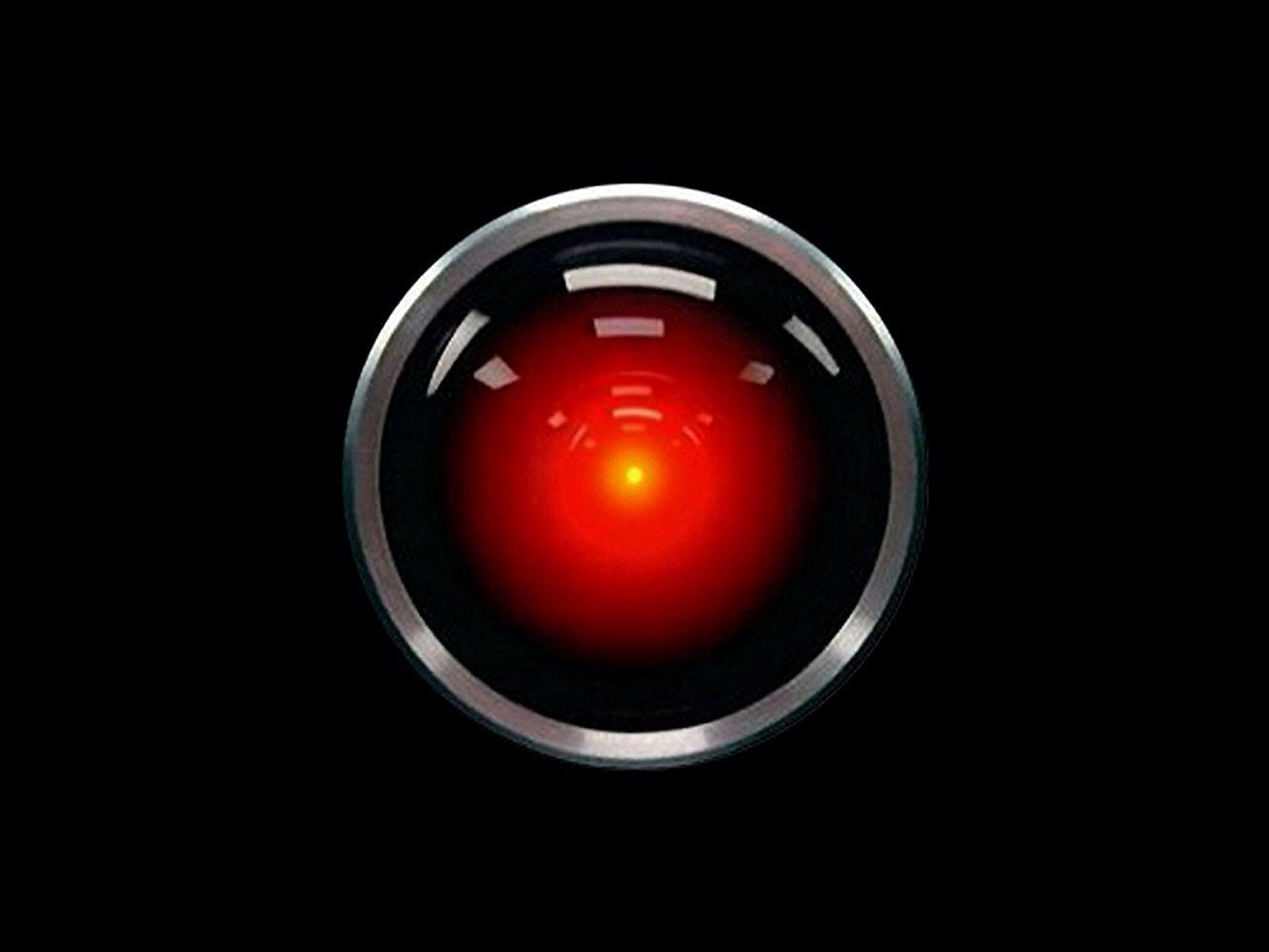

The Ghost Is Not In the Machine

The AI "person" we sense is only in our minds. But that's not necessarily a bad thing.

Many people who start using AI chatbots in daily life or at work begin to “feel” that the AI is a person. Not flesh and blood, but a person all the same. Why? It’s pareidolia. Our brains are wired to see faces and patterns. That kept us alive in the caveman days, when it helped us spot predators, prey, friends, and foes. Today, it makes us see faces in clouds and holy fig…